5 Built-in iPhone Features That Can Help ELs

With the launch of a new iPhone inevitably comes a new iOS. (If you’re an Android user, I haven’t forgotten about you! I’ll be covering the same topic for Androids next month!) A lot of English language learners download all sorts of apps to help them with their vocabulary, grammar, listening, and more. But what many iPhone users don’t know is that Apple has a long reputation for building useful accessibility features that can help students (and anyone else who wants to learn) get more English practice with only a few taps on the screen.

Let’s look at five built-in iPhone features, some unsung and some brand new, that can boost learning for dedicated students.

1. Text Size

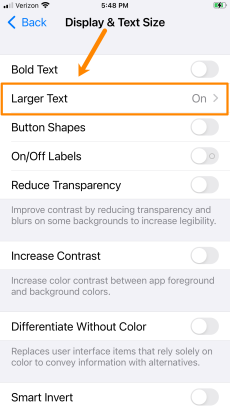

I’ll be the first to admit that display and text size aren’t obviously tied to language learning, but they can make a big difference for learners who don’t realize how the tech is built to support them. Older students or those with visual impairments will quickly see the value of a larger default text size, which helps them distinguish between easily confused letters and shapes. It won’t solve all their language learning problems, but easing some of the anxiety that comes with English’s wildly inconsistent spelling can be a real benefit. Making English just a little easier to read is one way to lower barriers to entry.

Where to find it: Settings > Accessibility > Display & Text Size > Larger Text

Change the font size to recognize letters and words more easily.

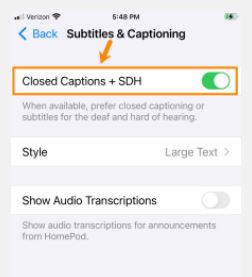

2. Subtitles & Captioning

Subtitles and captions are a godsend for language learners. Whether they’re using them to follow along with a video from beginning to end, or just to pick up the occasional obscure vocabulary word, being able to read along with the video is a standard choice for many learners.

Turning on this setting tells apps that play video to show captions by default, so learners don’t have to hunt for them. One nice touch is that it even works on YouTube previews, so students can get a sense of what a video is about without committing to watching it. This is a great feature, and students will wonder how they ever lived without it.

Where to find it: Settings > Accessibility > Subtitles & Captioning > Toggle on “Closed Captions & SDH”

Activate subtitles to read along with videos.

Use Focus to eliminate distractions.

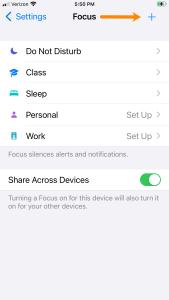

3. Focus

Brand new to iOS 15 is a feature called “Focus.” Focus is not a tool to develop language, but if your students are anything like mine, they could use some help avoiding the distractions on their phone while trying to master a language as complicated as English.

At first glance, Focus seems to be an advanced “Do Not Disturb” feature, allowing you to pick times when, say, text messages can’t come through (ahem, check the syllabus for class hours). While this can be useful, there’s actually an option to create an entirely custom iPhone screen that shows only the apps you’ve selected for a given time period. Psychologically, the difference between “no notifications from Instagram from 9 am–11 am” and “I can only see and access Google Docs, Flipgrid, and Newsela from 9 am–11 am” is HUGE.

Yes, your students will have to turn it on and choose to use it, but those who do are taking control of their easily distracted brains (SQUIRREL!) and positioning the tech to work for them in their studies rather than against them.

Focus is deeply customizable, so make sure to do some research to find all the ways it can be built around your students’ needs.

Where to find it: Settings > Focus OR Control Center > Focus

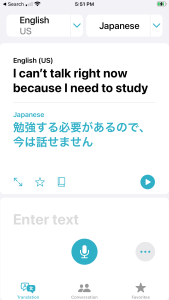

4. Translate

Translate is an app built into iOS that many people are unaware of. It got off to a bumpy start, but these days it’s transitioning from ugly duckling to beautiful swan. The basic app has grown a bit, but it’s still fairly straightforward: Enter text to be translated, and the translation shows up. Conversation mode lets learners talk with someone by holding the phone between them; as each person speaks, their words are transcribed and shown in the other person’s language.

The best part about Translate, though, is that it’s now system-wide on the iPhone. That means any text you can select on your phone can be translated. Selecting a single word or a longer passage and then tapping “Translate” on the pop-up bar gives students a (pretty accurate, by my informal checking) translation. There are also options to save translations to revisit later.

Of course, we don’t always want our students relying on translation, but you can’t convince me that direct translation hasn’t saved the day before.

Note: When students open the Translate app, there’s an option to help improve Siri & Dictation. Over time, this may improve the iPhone’s ability to understand a wider variety of World English accents, but the trade-off is giving up some of your privacy.

Where to find it: The Translate app is installed by default. Accessing it system-wide simply requires highlighting text on the iPhone.

Access Translate anywhere in iOS.

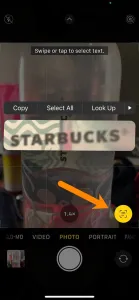

Live Text instantly recognizes words in photos and screenshots.

5. Live Text

Live Text is an excellent feature that may look familiar to anyone who has used Google Lens. It’s built directly into the iPhone camera: without any extra effort, it recognizes text and offers to copy it to the clipboard as text. That’s right! TEXT! This means students can take a picture of a sign they don’t understand, or the directions on a prescription, and have the words fully searchable right on their phone.

And of course (drumroll), students can combine Live Text with the system-wide Translate feature. That’s right: Take a picture, tap the word you don’t know, and get it translated. No more guessing, jumping back and forth to a dictionary, or double-checking that the spelling is right. Everything is available with a tap.

Note: Live Text is only available on iPhone XS, iPhone XR, and later models.

Where to find it: Settings > Camera > Live Text. Live Text will show up in your Camera app as an icon in the bottom right of the photo.

There are many more features that I couldn’t get to today, but a little exploration of the phone can open a world of possibilities for language learners. Do you know of a feature or service that helps your students learn? Share it in the comments; we’d all love to know more.